Process automation, the Internet of Things and what IPv6 will mean to all of us

Figure 1. Two-wire analog pressure transmitter. Courtesy of Endress+Hauser.

Digital communication technologies and applications have been a growing part of industrial markets since the early 1980s. The significant benefits are widely recognized. Throughout the same period, computer-based supply chain coordination and integration evolved, lending support to the globalization of commodity and finished goods markets.

As the second decade of the 21st century comes to an end, the outstanding trend may be that basic information technology is becoming more deeply embedded in machines, equipment and instruments, making them part of the Internet of Things (IoT).

The modern process-control era began when analog electronic concepts replaced pneumatics. IoT will take us to the next stage.

The two-wire transmitter

For years industrial process control was based on conventional analog signals. Early replacements for pneumatic loops used voltage-based signals, such as 0-5 or 0-10 volts. These worked, but voltage signals were degraded by the voltage drop across cable resistance, especially over long runs. Current loops proved more robust because the same current flows through every element of a series loop regardless of wire resistance, and most industries settled on 4-20 milliamp (mA) signals.

A 4-20 mA signal is transmitted over two wires from an instrument (see Figure 1) to a host control or monitoring system, which then interprets the signal and responds accordingly. The system often sends another 4-20 mA signal to a valve, pump or other controlled device. This method of communicating is effective, but information sent by the device is simply a single measured value. The host system interprets the value, and its response is based on settings defined by the user.
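As a minimal sketch of what the host does with that single value, the Python function below maps loop current linearly onto a transmitter's configured range. The 0-10 bar range is a hypothetical example, and the 3.6 mA and 21 mA fault limits are assumptions in the style of the NAMUR NE 43 recommendation; real hosts take these limits from their configuration.

```python
def scale_4_20ma(current_ma: float, lrv: float, urv: float) -> float:
    """Convert a 4-20 mA loop current to engineering units.

    lrv/urv are the lower and upper range values configured in the
    transmitter: 4 mA maps to lrv and 20 mA maps to urv.
    """
    # Assumed fault limits (NAMUR NE 43 style); real systems configure these.
    if current_ma <= 3.6 or current_ma >= 21.0:
        raise ValueError(f"loop current {current_ma} mA indicates a fault")
    fraction = (current_ma - 4.0) / 16.0  # 16 mA span between 4 and 20 mA
    return lrv + fraction * (urv - lrv)


if __name__ == "__main__":
    # Hypothetical pressure transmitter ranged 0-10 bar.
    print(scale_4_20ma(12.0, 0.0, 10.0))  # mid-scale current: 5.0 bar
```

Because the scale begins at 4 mA rather than 0, a broken wire (0 mA) cannot be mistaken for a legitimate zero reading, which is one practical reason the "live zero" became standard.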

A 4-20 mA analog approach to process control requires a pair of wires from each device back to the host system. In this analog world the signal says nothing about the device, only about the process variable being measured. Unless someone connects directly to the loop, there is no way to tell whether the reading is correct or to obtain any other information from the device.

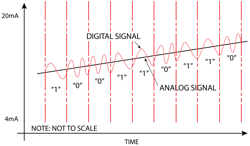

Figure 2. HART imposes a digital signal on the 4-20 mA output of a process transmitter. The digital signal can carry diagnostic and status information.

In the mid-1980s Rosemount developed and introduced the Highway Addressable Remote Transmitter (HART) communications protocol. A HART device superimposes a Bell 202 frequency-shift keying (FSK) signal on its basic analog 4-20 mA output (see Figure 2). Switching the signal between two frequencies, 1,200 Hz for a one and 2,200 Hz for a zero, produces a stream of bits that can carry far more information than the analog current alone.
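The modulation itself is simple to sketch. The Python example below, a simplified illustration rather than a HART modem implementation, generates a phase-continuous FSK waveform at HART's 1,200 bit/s rate, using 1,200 Hz for a one and 2,200 Hz for a zero. The 48 kHz sample rate is an arbitrary assumption. Because the sine wave averages to zero over time, superimposing it on the loop leaves the 4-20 mA reading essentially undisturbed.

```python
import math

BAUD = 1200          # HART bit rate, bits per second
MARK_HZ = 1200.0     # frequency representing a binary 1
SPACE_HZ = 2200.0    # frequency representing a binary 0


def fsk_samples(bits, sample_rate=48_000):
    """Return phase-continuous FSK samples (unit amplitude) for a bit sequence.

    Each bit lasts 1/BAUD seconds. The phase accumulator carries over between
    bits so the waveform has no jumps. In a real instrument this small signal
    rides on the 4-20 mA DC current, which this sketch does not model.
    """
    samples_per_bit = sample_rate // BAUD  # assumes sample_rate is a multiple of BAUD
    phase = 0.0
    out = []
    for bit in bits:
        freq = MARK_HZ if bit else SPACE_HZ
        step = 2.0 * math.pi * freq / sample_rate
        for _ in range(samples_per_bit):
            out.append(math.sin(phase))
            phase += step
    return out


if __name__ == "__main__":
    wave = fsk_samples([1, 0, 1, 1, 0])
    print(len(wave))  # 5 bits x 40 samples per bit = 200
```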

HART supports bi-directional communication, which can provide data access between the field instrument and its master, normally the host system. HART allows for up to two masters: the host system and a second device such as a handheld communicator. It allows the device to be configured, lets the master read the device’s primary and secondary process variables, and delivers information about the health of the device.
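To show how a master addresses a device, the sketch below assembles a HART short-frame request: preamble bytes, a start delimiter, an address byte whose top bit distinguishes the primary master from the secondary master, the command number, a byte count, any data, and a checksum formed by XOR-ing every byte from the delimiter onward. It reflects our reading of the HART data-link layer and is not a certified implementation; field layouts should be checked against the FieldComm Group specifications. Command 0 (Read Unique Identifier) serves as the example, and the 0-15 polling-address limit is an assumption of this sketch.

```python
def hart_short_frame(command: int, data: bytes = b"", polling_address: int = 0,
                     primary_master: bool = True, preambles: int = 5) -> bytes:
    """Build a master-to-device HART short-frame request (sketch)."""
    if not 0 <= polling_address <= 15:
        raise ValueError("this sketch assumes a 4-bit polling address (0-15)")
    # Address byte: bit 7 selects primary (1) or secondary (0) master,
    # bit 6 is the burst-mode flag (0 for a master request),
    # and the low bits carry the device's polling address.
    address = (0x80 if primary_master else 0x00) | polling_address
    delimiter = 0x02  # short frame, master to device
    body = bytes([delimiter, address, command, len(data)]) + data
    checksum = 0
    for b in body:
        checksum ^= b  # longitudinal parity over delimiter through data
    return bytes([0xFF] * preambles) + body + bytes([checksum])


if __name__ == "__main__":
    print(hart_short_frame(0).hex(" ").upper())  # FF FF FF FF FF 02 80 00 00 82
```

A handheld communicator acting as secondary master would pass `primary_master=False`, which clears bit 7 and changes the checksum to `02`.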

HART’s breakthrough for users was in providing a method to access more than just a process variable from a field device. It was also compatible with the analog loops found almost universally in industrial processes. The result was the "smart instrument."

HART was primarily used in point-to-point configurations but could also support multi-drop wiring topologies and multivariable field devices. However, working within the constraints of analog loops limited its communication speed to just 1,200 bits per second, which made it unsuitable for real-time process control.

Digital moves into industrial

In the years after HART’s introduction, industry organizations introduced fully digital process automation protocols such as Foundation Fieldbus and Profibus PA. These protocols threw out analog concepts entirely. Relieved of that limitation, their physical layers gave industrial processes access to a wider range of information, including process variables and diagnostic information, delivered in near real time.

Digital protocols such as these exceeded the capabilities of HART and facilitated constant two-way communication between the field instruments and the host system. This enabled monitoring device health indicators, and supported lifecycle and condition-based asset management using information contained in the transmitter and control system. Implementation of these digital protocols within instruments and host systems dramatically transformed the world of process instrumentation.

The protocols also removed the need for point-to-point wiring between every field device and the host system. Instead, multiple devices could be connected to network segments on a single cable while still providing fast two-way communications. Access to a vast amount of information about the field instrument led to a better understanding of the process and better control over these assets.

Dawn of the PC

At about the same time smart instruments and digital protocols were being introduced, the computer world was evolving. The IBM PC, originally offered as a consumer product in the early 1980s, proved it could be adapted for industrial monitoring. Companies began offering input/output (I/O) boards and human-machine interface (HMI) and supervisory control and data acquisition (SCADA) software so a PC could gather data from industrial field devices and display it on graphical interfaces. Soon, this off-the-shelf commercial product began taking significant market share in what had previously been a world of proprietary process control systems.

These proprietary process control systems were built on the suppliers’ equally proprietary digital communication systems. If a distributed control system (DCS) was purchased from Vendor A, the user was tied to Vendor A’s communication system, and often to its process transmitters and control valves as well. Users discovered that PC-based systems could perform the same tasks at much lower cost while communicating over commercial networks, which hastened the end of expensive HMI, DCS and network products based on proprietary hardware and unfamiliar operating systems.

As computerization spread, the need arose for an information infrastructure. PC-based workstations began to appear on desktops, and old mainframe and minicomputer networks with dumb terminals were carted away. IT groups adapted to the new systems and found ways to facilitate the movement of information and keep it safe. The biggest drawback with these early systems was the lack of a universal networking protocol.

Enter Ethernet

On May 22, 1973, at the Xerox research center in Palo Alto, California, Bob Metcalfe wrote a memo describing a network system he invented and dubbed "Ethernet." It could interconnect advanced computer workstations, making it possible to send data from one to another, as well as to high-speed laser printers. Ethernet was named after the luminiferous ether as an "omnipresent, completely passive medium for the propagation of electromagnetic waves." The system gave businesses and consumers an efficient way to transfer data across networks, setting a global standard.

Ethernet was introduced commercially in 1980 and standardized in 1983, about the time Rosemount was launching HART. Ethernet began to be used for office networks to interconnect computers, fax machines, printers and servers. It also helped connect systems to the newly created Internet.

In the industrial market, automation vendors began to experiment with connecting their control system platforms via Ethernet, but early on it did not deliver enough determinism. As a result, the digital communication protocols discussed earlier were adopted for device-level networks.

During those early years, Ethernet came under constant attack by proponents of other networks. They said Ethernet was not fast enough, lacked determinism, and could not be sufficiently secured for industrial use, but the system has overcome every argument and attack over the past 30 years. Various companies have found ways to add improvements to make the basic platform more suitable to the needs of industrial networks.

Figure 3. Digital transmitter with EtherNet/IP communications.

Names such as Profinet, EtherCat and Modbus TCP entered the industrial lexicon. EtherNet/IP was developed by Rockwell Automation in the 1990s, and it combined the advantages of the proven DeviceNet and ControlNet industrial networks with the Internet Protocol suite and IEEE 802. The result was an Ethernet variant capable of supporting real-time monitoring and control when used in conjunction with smart EtherNet/IP instruments (see Figure 3).
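One way to see how EtherNet/IP rides on standard Internet protocols is to look at its encapsulation layer: each message sent over TCP or UDP begins with a 24-byte, little-endian header. The Python sketch below packs that header with the standard `struct` module. The command codes (0x0063 ListIdentity, 0x0065 RegisterSession) and TCP port 44818 reflect our understanding of the ODVA specification and should be verified against it; the sketch only builds bytes and opens no connection.

```python
import struct

# Encapsulation command codes, as we understand the ODVA EtherNet/IP specification.
LIST_IDENTITY = 0x0063
REGISTER_SESSION = 0x0065

ENIP_TCP_PORT = 44818  # registered port for EtherNet/IP explicit messaging

# 24-byte header: command, length, session handle, status, sender context, options.
# "<" selects little-endian byte order with no padding between fields.
HEADER = struct.Struct("<HHII8sI")


def encapsulate(command: int, payload: bytes = b"", session: int = 0,
                context: bytes = b"\x00" * 8) -> bytes:
    """Prefix a payload with an EtherNet/IP encapsulation header (sketch)."""
    header = HEADER.pack(command, len(payload), session, 0, context, 0)
    return header + payload


def register_session_request() -> bytes:
    """RegisterSession request: protocol version 1, option flags 0."""
    return encapsulate(REGISTER_SESSION, struct.pack("<HH", 1, 0))


if __name__ == "__main__":
    print(register_session_request().hex(" "))
```

A client would send `register_session_request()` to port 44818 and use the session handle returned in the reply for subsequent explicit messages.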

Today, Ethernet has evolved with improved speed, determinism and security to meet the needs of industrial markets. Its networks are moving down from enterprise levels to individual controllers in the field. Adoption at the field device level has been slower because Ethernet communication has been considered too overhead-intensive and costly for a single instrument or actuator, but this is changing as the cost of Ethernet hardware continues to plummet.

IP at the device

For the most part, users and vendors have stuck with HART or other simpler digital protocols, particularly for relatively simple instruments. However, with the falling cost of sophisticated transmitter electronics and the growing ubiquity of Ethernet, EtherNet/IP is now beginning to move down to individual field devices and supplant other approaches. The following factors contribute to Ethernet’s advantages over conventional analog and digital device networks:

- Easier connectivity to a variety of host systems

- Simultaneous communication with multiple hosts

- Instant familiarity to anyone with Ethernet experience

- Use of conventional Ethernet tools and technologies

- Quality of service (QoS) to prioritize network traffic

- Simple Network Management Protocol (SNMP) to monitor and manage the network

- More network topology options when switches are deployed

- Stronger support for wireless data transmission

- Better security through standard Ethernet tools

- Economies of scale that promise future gains outpacing fieldbus networks

- Easier migration of information up to the management information system

- Support from the IT infrastructure organization
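As an illustration of the quality-of-service item above, an application can mark its packets with a Differentiated Services Code Point (DSCP) so that switches and routers configured for QoS can prioritize them. The sketch below, assuming a Linux or macOS host where the `IP_TOS` socket option is honored, tags a UDP socket with the Expedited Forwarding class. Marking is only a request; the network must be configured to act on it.

```python
import socket

# DSCP Expedited Forwarding (RFC 3246), a class commonly used for
# latency-sensitive traffic.
DSCP_EF = 46


def make_prioritized_udp_socket(dscp: int = DSCP_EF) -> socket.socket:
    """Create an IPv4 UDP socket whose outgoing packets carry the given DSCP.

    The DSCP occupies the upper six bits of the former IPv4 Type of Service
    byte, so it is shifted left by two; the low two (ECN) bits stay zero.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit value (0-63)")
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, dscp << 2)
    return sock


if __name__ == "__main__":
    with make_prioritized_udp_socket() as s:
        # Read the option back to confirm the marking was applied.
        print(s.getsockopt(socket.IPPROTO_IP, socket.IP_TOS))
```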

Once Ethernet is used to communicate across all phases of an industrial process, information can be aggregated for productivity gains. To see how this works, users must understand some key points about the Internet, Web browsers and, most importantly, the IoT. The IoT is the next major change in digital technology. It will bring information from smart devices at their point in the process directly to anyone, anywhere, anytime.

IT & the World Wide Web

The World Wide Web emerged into the public consciousness during the 1990s as a means of using the Internet to read and write information among computers. Using the Web required a Web browser, a software application for retrieving, presenting and traversing information resources on the Web.

In 1990 Tim Berners-Lee developed both the first Web server and the first Web browser, WorldWideWeb, which was later renamed Nexus. Many others were soon developed. The 1993 Mosaic browser, co-developed by Marc Andreessen, who went on to co-found Netscape, was particularly easy to use and install and is often credited with sparking the Internet boom of the 1990s. Today major Web browsers include Firefox, Internet Explorer, Microsoft Edge, Google Chrome and Safari.

Most of the time, the Internet is accessed through a Web browser. This modality lets users navigate and interact across the Web with ease, making it simple to communicate, learn and perform transactions. Behind the scenes, the browser relies on Internet Protocol (IP) addresses, which provide the key link to the source of the data on the Internet. Each server that delivers Web pages is reached at an IP address; the browser looks up the address for a site’s name through the Domain Name System (DNS) so it knows where to send its request.
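The name-to-address lookup that precedes a Web request can be reproduced with Python's standard `socket.getaddrinfo`, which asks the operating system's resolver (the local hosts file and DNS). The sketch below resolves a host name to its distinct IP addresses; `localhost` is used so the example runs without network access.

```python
from __future__ import annotations

import ipaddress
import socket


def resolve(hostname: str, port: int = 80) -> list[str]:
    """Return the distinct IP addresses the system resolver reports for hostname."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    addresses: list[str] = []
    for _family, _type, _proto, _canonname, sockaddr in infos:
        address = sockaddr[0]  # first element is the host address for IPv4 and IPv6
        if address not in addresses:
            addresses.append(address)
    return addresses


if __name__ == "__main__":
    # "localhost" normally resolves from the local hosts file, no network needed.
    for address in resolve("localhost"):
        # Drop any "%zone" suffix (used by link-local IPv6) before parsing.
        version = ipaddress.ip_address(address.split("%")[0]).version
        print(address, f"IPv{version}")
```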

Today, with more than 90 percent of the Earth’s land surface covered by wireless service, the advent of smart devices has enabled access to audio, text and the Internet virtually anywhere in the world. The Internet has historically been created by people, for people and about people.

In other words, nearly all of the roughly 50 petabytes of data available on the Internet were first captured and created by human beings by typing, pressing a record button, taking a digital picture or scanning a bar code. The Internet of People changed the world, and an emerging new Internet will change it again. This new Internet is about connecting "things" to people and host systems.

Things talking to machines

The term Internet of Things was coined by British entrepreneur Kevin Ashton in 1999. With the IoT, virtually any powered device on Earth can be connected through the Internet. This greatly expands the possibilities for communication among devices, machines, host systems and people.

The IoT is built on cloud computing and networks of data-gathering devices. It is mobile and virtual and offers instantaneous connections. Eventually, this new Internet will transform daily life, making everything "smart," from how people travel to how they manage their healthcare.

Machine entities can now share their information with other machine entities more easily, in support of decisions based on actionable information. These entities, equipped with smart sensors and instrumented to communicate, can sense and respond while interacting with a larger computer environment. What are the mechanics of this communication and what does it mean for our industrial processes?

Smart sensors

Most physical industrial measurements are analog in nature: a numerical value on a scale. These fundamental measurements can be digitized and sent out through an Ethernet-based network. For example, Memosens digital technology, used in analytical sensors connected to instruments and analyzers, makes a wealth of information available through Ethernet, giving it the ability to be part of the Internet of Things.

The analytical instrument uses a probe whose sensor communicates with a transmitter through a digital link. Traditionally, this type of process sensor had no capabilities of its own beyond sending a signal to the transmitter.

Figure 4. Memosens digital inductively coupled sensor connection

However, advances in electronics now allow some of those functions to be moved closer to the actual sensor. Memory in the probe head (see Figure 4) allows it to be a self-contained smart device capable of holding calibration and manufacturing information and data on the sensor’s operation. Because this data is held in the sensor, it can be managed independently at a central location.

The sensor is still coupled to a transmitter in the field, but calibration can be handled independently in a shop or lab. Using a software program, all the information concerning the sensor’s lifecycle can be stored in a central database and accessed at any time. This approach dramatically reduces process downtime and allows for complete lifecycle management of the smart instrument.
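The central lifecycle database described above can be sketched in a few lines with Python's built-in `sqlite3` module: each calibration record is keyed to the sensor's serial number, so a sensor's history follows it from the lab to whichever transmitter it is plugged into. The table, column names, serial numbers and the pH slope and zero-point fields are hypothetical illustrations, not the format of any Endress+Hauser software.

```python
import sqlite3


def open_lifecycle_db(path: str = ":memory:") -> sqlite3.Connection:
    """Create (or open) a minimal sensor-lifecycle store with a hypothetical schema."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS calibrations (
               serial     TEXT NOT NULL,  -- serial number read from the sensor head
               calibrated TEXT NOT NULL,  -- ISO 8601 timestamp of the calibration
               slope      REAL NOT NULL,  -- e.g. pH electrode slope, mV/pH
               zero_point REAL NOT NULL   -- e.g. pH zero point, mV
           )"""
    )
    return conn


def record_calibration(conn, serial, calibrated, slope, zero_point):
    """Store one calibration result, typically captured in the shop or lab."""
    conn.execute(
        "INSERT INTO calibrations VALUES (?, ?, ?, ?)",
        (serial, calibrated, slope, zero_point),
    )
    conn.commit()


def history(conn, serial):
    """Return (timestamp, slope, zero_point) rows for one sensor, oldest first."""
    rows = conn.execute(
        "SELECT calibrated, slope, zero_point FROM calibrations "
        "WHERE serial = ? ORDER BY calibrated",
        (serial,),
    )
    return rows.fetchall()


if __name__ == "__main__":
    db = open_lifecycle_db()
    record_calibration(db, "SN-0001", "2019-03-01T08:00:00", 58.9, 1.5)
    record_calibration(db, "SN-0001", "2019-06-01T08:00:00", 58.2, 3.0)
    print(history(db, "SN-0001"))
```

Storing timestamps as ISO 8601 text keeps them sortable with a plain `ORDER BY`, which keeps the sketch free of date-handling code.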

Data from a Memosens-capable instrument can be communicated to a host system using the protocols already mentioned. When Ethernet communication is used, data is more readily available at the management level. Regardless of which digital protocol is used, individual measurement systems can be accessed and controlled remotely through an IP-addressable Web server.

The smart instrument thus contributes to the Internet of Things by reporting information about the process to which it is connected. With remote access to a field instrument and all its information, operators gain a way to improve maintenance and to collect data that supports better process control. A smart instrument’s IP-addressable Web page offers the ability to access the device at any time, from anywhere over the Internet.
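The IP-addressable Web page idea can be illustrated with nothing but the Python standard library: a stand-in instrument serves a JSON status page, and a client on the network reads it. The `/status` path, tag name and reading values are hypothetical and do not represent any real device's interface. Production devices add authentication and encryption, which this sketch omits.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical readings a smart analyzer might publish; not a real device API.
DEVICE_STATUS = {"tag": "AIT-101", "ph": 7.02, "temperature_c": 24.6, "status": "OK"}


class StatusHandler(BaseHTTPRequestHandler):
    """Stand-in for an instrument's embedded Web server."""

    def do_GET(self):
        if self.path != "/status":
            self.send_error(404)
            return
        body = json.dumps(DEVICE_STATUS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # suppress per-request logging in this demo


def fetch_status(host: str, port: int) -> dict:
    """Read the JSON status page from an IP-addressable instrument."""
    # An empty ProxyHandler ignores proxy environment variables so the
    # request goes straight to the device address.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(f"http://{host}:{port}/status", timeout=5) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Port 0 lets the operating system pick a free port on the loopback address.
    server = HTTPServer(("127.0.0.1", 0), StatusHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(fetch_status("127.0.0.1", server.server_address[1]))
    server.shutdown()
    server.server_close()
```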

The instrumentation world is populated with similar smart instruments able to measure multiple process variables. In addition to measured variable information, they can also transmit status and diagnostic information, detect possible problems, verify their own accuracy and allow for in-situ calibration.

However, the number of smart devices of all types and in all applications, including the industrial world, is growing exponentially, straining the supply of available IP addresses.

IPv4 versus IPv6

As previously mentioned, an IP address provides a key link to a specific source of data on the Internet, so every device reachable on the Internet needs a unique address. Internet Protocol version 4 (IPv4) was the first publicly used IP version. It was developed as a research project by the Defense Advanced Research Projects Agency (DARPA), a U.S. Department of Defense agency, before becoming the foundation for the Internet.

IPv4 includes an addressing system using 32-bit numerical identifiers. These addresses are typically displayed in quad-dotted notation as decimal values of four octets, each in the range 0 to 255, or 8 bits per number. Altogether, IPv4 provides about 4.3 billion (2³²) addresses.
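The dotted-quad notation is just a readable form of one 32-bit number. The sketch below converts between the two by shifting each octet into place 8 bits at a time and cross-checks the result against Python's standard `ipaddress` module. The example address 192.0.2.10 comes from a range reserved for documentation.

```python
import ipaddress


def dotted_quad_to_int(address: str) -> int:
    """Pack four decimal octets (0-255) into one 32-bit integer."""
    octets = [int(part) for part in address.split(".")]
    if len(octets) != 4 or any(not 0 <= o <= 255 for o in octets):
        raise ValueError(f"not a dotted-quad IPv4 address: {address!r}")
    value = 0
    for octet in octets:
        value = (value << 8) | octet  # each octet contributes 8 bits
    return value


def int_to_dotted_quad(value: int) -> str:
    """Unpack a 32-bit integer into dotted-quad text."""
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


if __name__ == "__main__":
    value = dotted_quad_to_int("192.0.2.10")
    print(value)                                              # 3221225994
    print(value == int(ipaddress.IPv4Address("192.0.2.10")))  # True
    print(int_to_dotted_quad(value))                          # 192.0.2.10
    print(2 ** 32)  # size of the IPv4 address space: 4294967296
```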

Internet Protocol version 6 (IPv6) is the most recent version. It provides an identification and location system for computers on networks and routes traffic across the Internet. IPv6 was developed by the Internet Engineering Task Force (IETF) to replace IPv4, whose pool of available addresses is running out.

Every device on the Internet is assigned an IP address for identification and location definition. With the rapid growth of the Internet after commercialization in the 1990s, it was only a matter of time until all those addresses would be used. By 1998, the IETF had formalized the successor protocol. IPv6 addresses are represented as eight groups of four hexadecimal digits with groups separated by colons, such as 2001:0db8:85a3:0042:1000:8a2e:0370:7334, although rules exist for abbreviating this full notation.
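Python's standard `ipaddress` module can show the full and abbreviated forms side by side. In the shortened form, leading zeros in each group are dropped and one run of all-zero groups may be replaced by `::`, following the conventions of RFC 5952. The example addresses fall in the 2001:db8::/32 range reserved for documentation.

```python
import ipaddress

full = "2001:0db8:85a3:0042:1000:8a2e:0370:7334"
addr = ipaddress.IPv6Address(full)

print(addr.exploded)    # 2001:0db8:85a3:0042:1000:8a2e:0370:7334
print(addr.compressed)  # 2001:db8:85a3:42:1000:8a2e:370:7334 (leading zeros dropped)

# "::" stands in for one run of all-zero groups.
print(ipaddress.IPv6Address("2001:0db8:0000:0000:0000:0000:0000:0001"))  # 2001:db8::1
```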

IPv6 uses a 128-bit address, allowing approximately 3.4×10³⁸ addresses, more than 7.9×10²⁸ times as many as IPv4’s mere 4.3 billion. To put this in another perspective, IPv6 can provide more than 4×10²⁸ addresses for each of the roughly 7.7 billion people alive today.
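These figures are easy to verify with Python's exact integer arithmetic. In the sketch below, the world-population value is an assumption (roughly the 2019 figure); the per-person result scales inversely with it.

```python
IPV4_SPACE = 2 ** 32
IPV6_SPACE = 2 ** 128


def addresses_per_person(population: float) -> float:
    """IPv6 addresses available per person for an assumed population."""
    return IPV6_SPACE / population


print(f"{IPV6_SPACE:.2e}")                   # 3.40e+38 IPv6 addresses
print(IPV6_SPACE // IPV4_SPACE == 2 ** 96)   # True: the ratio is exactly 2**96
print(f"{IPV6_SPACE // IPV4_SPACE:.2e}")     # 7.92e+28
# Assumed world population of about 7.7 billion (2019).
print(f"{addresses_per_person(7.7e9):.1e}")  # 4.4e+28
```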

Unfortunately, the two protocols are not interoperable, which makes the transition complicated. However, several IPv6 transition mechanisms have been devised to permit communication between IPv4 and IPv6 hosts.
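Two such transition mechanisms embed an IPv4 address inside an IPv6 address, and the standard `ipaddress` module can extract it. IPv4-mapped addresses (`::ffff:0:0/96`) are how dual-stack software represents IPv4 peers on an IPv6 socket; 6to4 addresses (`2002::/16`) belong to an early tunneling scheme that has since fallen out of favor. The example addresses come from documentation ranges.

```python
import ipaddress

# IPv4-mapped IPv6 address (::ffff:0:0/96): how dual-stack software represents
# a peer that reached an IPv6 socket over IPv4.
mapped = ipaddress.IPv6Address("::ffff:192.0.2.1")
print(mapped.ipv4_mapped)     # 192.0.2.1

# 6to4 address (2002::/16): the 32 bits after the prefix carry the site's
# public IPv4 address, which tunnel endpoints use to cross IPv4 networks.
six_to_four = ipaddress.IPv6Address("2002:c000:201::1")
print(six_to_four.sixtofour)  # 192.0.2.1

# An ordinary IPv6 address embeds neither form.
print(ipaddress.IPv6Address("2001:db8::1").ipv4_mapped)  # None
```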

IPv6 provides other technical benefits in addition to a larger addressing space. In particular, it permits hierarchical address allocation methods to facilitate route aggregation across the Internet, and as a result it limits the expansion of routing tables. Use of multicast addressing is expanded and simplified, providing additional optimization for service delivery. Device mobility, security and configuration aspects have been considered in the design of the protocol.
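Route aggregation is what hierarchical allocation buys. In the sketch below, four consecutive /48 customer prefixes handed out from one provider block collapse into a single /46, so routers elsewhere on the Internet need to carry one route instead of four. The prefixes come from the 2001:db8::/32 documentation range, and the allocation plan is hypothetical.

```python
import ipaddress

# Four customer prefixes assigned consecutively from one provider block.
customer_prefixes = [
    ipaddress.IPv6Network("2001:db8::/48"),
    ipaddress.IPv6Network("2001:db8:1::/48"),
    ipaddress.IPv6Network("2001:db8:2::/48"),
    ipaddress.IPv6Network("2001:db8:3::/48"),
]

# collapse_addresses merges adjacent networks into the fewest covering prefixes,
# the same idea that lets upstream routers advertise one route instead of four.
aggregated = list(ipaddress.collapse_addresses(customer_prefixes))
print(aggregated)  # [IPv6Network('2001:db8::/46')]
```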

Conclusion

Projections suggest that in 2023 each of us could be connected to 3,000 to 5,000 smart devices each day. The IoT is growing rapidly and will change the way products create value. Easy access to all this information will unleash a new wave in the value chain of manufacturing companies, changing the way data impacts human interaction. The Internet makes it possible, but it all starts with smart devices.

Steven Smith is the senior product marketing manager, analytical, for Endress+Hauser USA. He is responsible for technology application, business development and product management over a range of analytical products. He obtained a BS degree from the University of Wisconsin and an MBA from the University of Colorado.

Endress+Hauser began U.S. operations in 1970 and is now one of the largest instrument manufacturers in the country.